By Lisa M. Krieger

The Mercury News

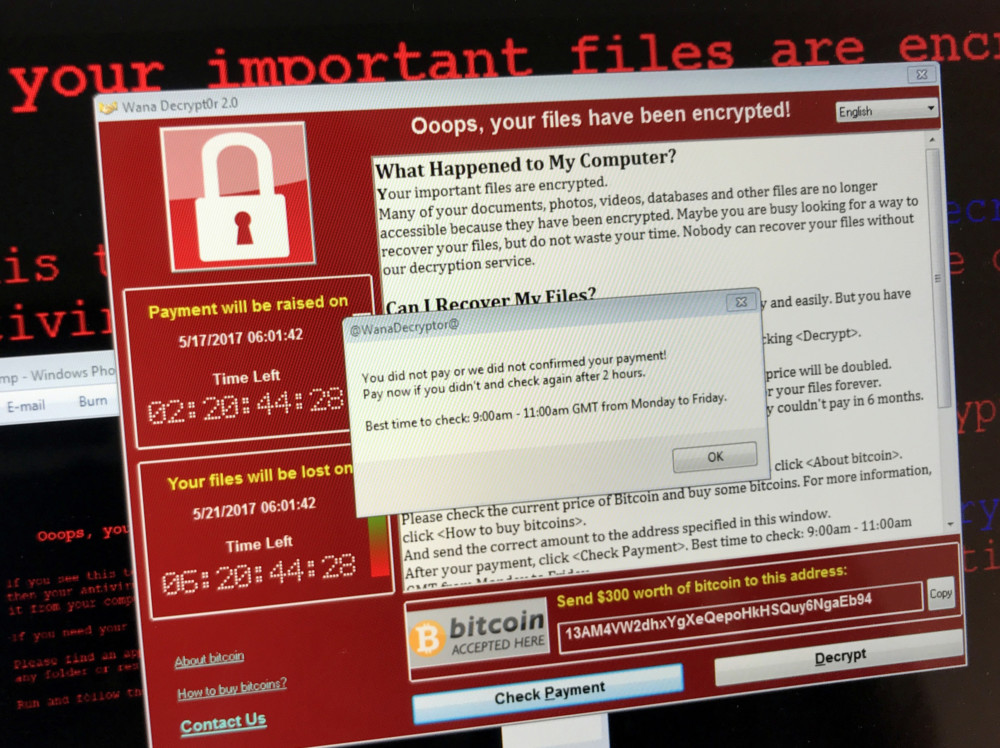

Call it the curse of the Internet age: Lies spread farther, faster, deeper and more broadly than the truth.

In the first detailed analysis of how misinformation spreads through the Twittersphere, researchers at the Massachusetts Institute of Technology found that false news is 70 percent more likely to be retweeted than the truth.

It takes the truth about six times as long as a falsehood to reach 1,500 people, they found.

Don’t blame the bots. Contrary to conventional wisdom, we’re the ones spreading all the bad stuff, according to the analysis. That’s likely because falsehoods are more novel and click-y than the truth, and we’re more likely to share what’s new.

The research, published Thursday in the journal Science, analyzed about 126,000 stories tweeted by about 3 million people. Twitter provided funding and access to the data.

Misinformation has been our enemy at least since the days when hucksters sold so-called snake oil from their carts.

But social media provides the currents that sweep false and misleading news far and wide.

The new analysis offers high-tech proof of that age-old axiom: “A lie will go round the world while truth is pulling its boots on.”

“For us, this is one of the most important issues facing social media today,” said Sinan Aral of MIT’s Sloan School of Management, who conducted the research with Soroush Vosoughi and Deb Roy of the MIT Media Lab.

“We find false news travels far faster and further than the truth in every category of information, sometimes by an order of magnitude,” he said. “This has very important effects on our society, our democracy, our politicians, our economy.”

The recent indictment of 13 Russians in the operation of a “troll farm” that spread false information related to the 2016 U.S. presidential election has renewed the spotlight on the power of “fake news” to influence public opinion, and has focused attention on the role of social media companies like Twitter and Facebook in monitoring their content.

The MIT team analyzed the diffusion of verified true and false news stories via Twitter between 2006 and 2017.

Although the media have paid considerable attention to anecdotal accounts of how false news spreads, there had been no large-scale empirical study of the diffusion of misinformation.

The stories were classified as true or false using the verdicts of six independent fact-checking organizations: snopes.com, politifact.com, factcheck.org, truthorfiction.com, hoax-slayer.com, and urbanlegends.about.com.

In particular, the team looked at the likelihood that a tweet would set off a “cascade” of retweets, producing patterns of repeated, clustered conversation.

Whereas the truth rarely diffused to more than 1,000 people, the top 1 percent of false-news cascades routinely diffused to between 1,000 and 100,000 people, they found.

Of the various types of false news, political news was the most virulent, spreading at three times the rate of false news about terrorism, natural disasters, science, urban legends or financial information.

Were the outspoken student survivors of Florida’s recent mass shooting mouthpieces of the FBI? Nope. Pawns of left-wing activist billionaire George Soros? They weren’t. Stooges of the Democratic Party? Not that, either.

But these groundless claims spread like wildfire through the digital ecosystem.

There also was a spike in rumors that contained partially true and partially false information during the Russian annexation of Crimea in 2014.

False rumors have affected stock prices and the motivation for large-scale investments, the team said. For example, after a false tweet claimed that Barack Obama, then the president, had been injured in an explosion, the stock market tumbled, wiping out $130 billion in stock value.

The total number of false rumors peaked at the end of 2013, at the end of 2015 and again at the end of 2016, during the last U.S. presidential election, when hyper-partisan anxieties ran high, the study found. It’s not just a right-wing thing; plenty of conspiratorially minded posts insist that the real story of the Trump administration is criminally nefarious.

Is there a child sex ring at a pizza joint? Nope. Did Pope Francis endorse Donald Trump? Nope. Did Hillary Clinton sell weapons to ISIS? She didn’t. Former President Obama didn’t ban the Pledge of Allegiance. President Trump didn’t dispatch his personal plane to save 200 starving Marines. Is Trump about to be arrested? No.

“The problem runs deep,” said Dr. David L. Katz, who was not involved in the research. “Cyberspace is the ultimate, ecumenical echo chamber. Everyone can shout into it, and every shout has the same chance to echo from the megaphones of the sympathetic.”

“News can be quite misleading even when not overtly false,” added Katz, founder of the True Health Initiative, which aims to replace fake health claims and fad diets with reliable and accurate information. “The media have a vested interest in constant titillation, and consistent, reliable information flow does not serve that agenda.”

To probe whether Twitter users were more likely to retweet information that was considered “novel,” the MIT team conducted an additional, rigorous analysis.

The data support a “novelty hypothesis,” they concluded. False news was more novel than the truth, and novel information is more likely to be retweeted. In addition, false rumors inspired replies expressing greater surprise, fear and disgust, emotions that could prompt people to share. The truth, on the other hand, inspired greater sadness, anticipation, joy and trust.

Understanding these dynamics is necessary to counteract the phenomenon’s negative influence on society, the authors said.

In a related article in the journal Science, a professor from Indiana University called for a coordinated investigation into the underlying social, psychological and technological forces behind fake news.

“What we want to convey most is that fake news is a real problem, it’s a tough problem, and it’s a problem that requires serious research to solve,” said professor Filippo Menczer of IU’s School of Informatics, Computing and Engineering.

The tech companies that create the platforms used to produce and consume information, such as Google, Facebook and Twitter, have an “ethical and social responsibility transcending market forces” to contribute to scientific research on fake news, he said.