By David Pierson

Los Angeles Times

WWR Article Summary (tl;dr) Jennifer Stromer-Galley, a professor at Syracuse, says, “[Facebook is] so good at being a business, but really bad at recognizing its role in society…It is conceivable the company is so big and complex, there are dimensions and aspects of Facebook no one is paying attention to.”

When it comes to business, Facebook co-founder Mark Zuckerberg is undeniably a visionary.

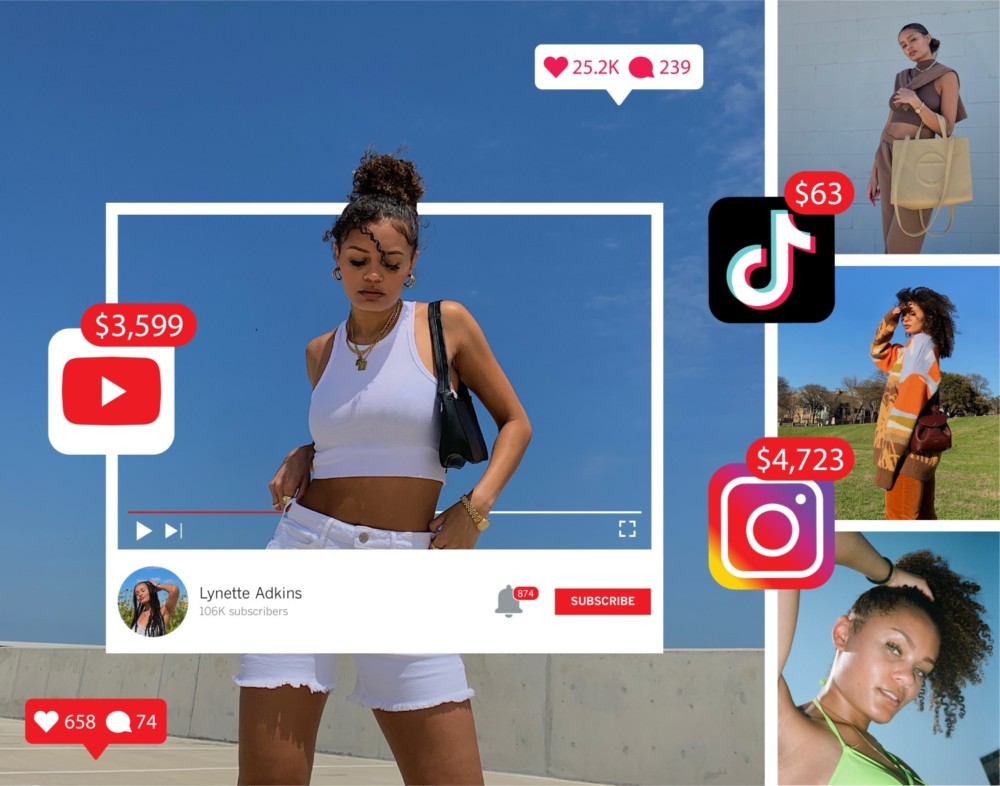

He made a big bet that paid off on photo-sharing app Instagram, charged full bore into mobile when others stood pat, and recognized early on the fortune that could be made in advertising by mining all aspects of his users’ lives down to the square footage of their homes.

But Zuckerberg’s prescient skills seem to waver when the social and cultural intricacies of the real world leak onto his ubiquitous platform.

Defensive at times, as when he initially disputed the premise that fake news on Facebook may have influenced the 2016 election, Zuckerberg can come across as someone who has yet to realize the true power and scope of the platform he built.

In just the last year, Facebook was caught off-guard when a report showed its advertising service allowed audiences to be targeted through offensive labels like “Jew Hater.”

It first denied, then recognized, it was being exploited by Russian propagandists to influence the presidential campaign with fake news and paid ads.

And it was slow to remove terrorist groups from its network and slow to anticipate that users would livestream murders and other acts of violence.

A company optimized for digital engagement, it turns out, may not have been primed to deal with the darkest aspects of humanity and society.

“They’re so good at being a business, but really bad at recognizing its role in society,” said Jennifer Stromer-Galley, an information studies professor at Syracuse University. “It is conceivable the company is so big and complex, there are dimensions and aspects of Facebook no one is paying attention to.”

“That’s to the detriment of our democracy and our society,” Stromer-Galley continued. “If they can’t start getting on top of these problems, they’re going to start getting regulated.”

Whether Facebook’s public problems are evidence of unintended consequences, shortsightedness or willful blindness is open to debate.

But pressure on the company to get policy (and its algorithms) right will only mount now that it counts a quarter of the world’s population as its users, effectively turning the platform into a digital reflection of society.

A company that started out in Zuckerberg’s dorm room as a “hot or not” program for fellow Harvard students is now being asked to pick sides in the deadly civil war in Myanmar, determine the difference between hate speech and political expression, and adapt its so-called real-name policy to recognize transgender users on the platform.

So far, the company has shown a penchant for mitigating controversies after they arise.

“If we discover unintended consequences in the future, we will be unrelenting in identifying and fixing them as quickly as possible,” Facebook Chief Operating Officer Sheryl Sandberg said in a blog post last week in response to the offensive categories for targeted advertising, a quirk the company says it has fixed.

(Facebook did not respond to a request for an interview for this article.)

Experts say Silicon Valley companies are predisposed to break things first and apologize later, given their trade in cutting-edge technology and new business models.

“One of the most common explanations we hear for the reactive responses, especially in the high-tech industry, centers around the novel and not-well-understood contexts arising from the creation and use of new technologies,” said Valerie Alexandra, a business professor at San Diego State. “Many argue that because the internet and social media platforms are relatively new and evolving contexts, there are still a lot of gray areas with regards to what is legal and illegal and what is ethical and unethical.”

Still, Alexandra said Facebook could do more to envision how a company its size could cause harm.

“Certainly, it’s a known problem that bigger companies often face a problem of becoming slower and more reactive,” she said. “However, bringing over $27 billion in (ad) revenue in 2016, Facebook has a lot of resources to work with.”

Facebook doesn’t have to do it alone. Sometimes it simply has to heed advice.

The Washington Post reported Sunday that President Obama tried to caution Zuckerberg about the critical role his platform was playing in the spread of misinformation during the presidential campaign. Zuckerberg responded by telling Obama that fake news was not widespread on Facebook.

The conversation reportedly took place just over a week after Zuckerberg said the idea that Facebook and fake news influenced the election was “crazy.”

Ten months later, Facebook is something of a poster child for the backlash in Washington against Silicon Valley, a region increasingly caught up in the nation’s culture wars as more Americans question the values coded into the algorithms they use each day.

On Thursday, Facebook caved to pressure to release to Congress 3,000 ads that it had sold, that were linked to a Russian propaganda operation, and that the company had resisted sharing.

Calls are now growing louder to regulate Facebook like a media company rather than as a platform, a designation that lets it shirk most legal liability for the content that appears on the social network. Some lawmakers are considering a bill that would require more transparency for political ads that run on Facebook.

Facebook said it would disclose backers of political ads on its platform, much like what media companies are already required to do.

“Not only will you have to disclose which page paid for an ad, but we will also make it so you can visit an advertiser’s page and see the ads they’re currently running to any audience on Facebook,” Zuckerberg said in a live broadcast last week.

The move comes months after political scientists said such transparency was critical for political accountability.

Zuckerberg may need to push Facebook to hire more social scientists rather than engineers if he wants to avoid similar controversies in the future, said Sarah T. Roberts, a professor of information studies at UCLA.

“There’s no lack of vision, no end to the creativity of top technology firms to generate revenue,” Roberts said.

“But when it comes to the downside of technology, whether it’s foreign interference or other nefarious uses, their scope of the vision seems to be very much impeded.”