By Julia Prodis Sulek

The Mercury News

WWR Article Summary (tl;dr) While the executives of the big three tech companies have all talked about increasing transparency and authenticity, they all acknowledge that nothing is foolproof against misinformation.

PALO ALTO, Calif.

As executives from Silicon Valley’s social media giants, Facebook, YouTube and Twitter, gathered Thursday at a Stanford University forum to discuss their roles in spreading conspiracy theories, hate speech and fake news, the problems were clear. The solutions, however, weren’t.

“Our industry was too slow to wake up to this threat,” said Facebook’s Vice President of Public Policy Elliot Schrage. “It’s not does it happen, it’s how you manage it. All of us in the digital sphere, particularly Facebook, have a long way to go to strike that digital balance.”

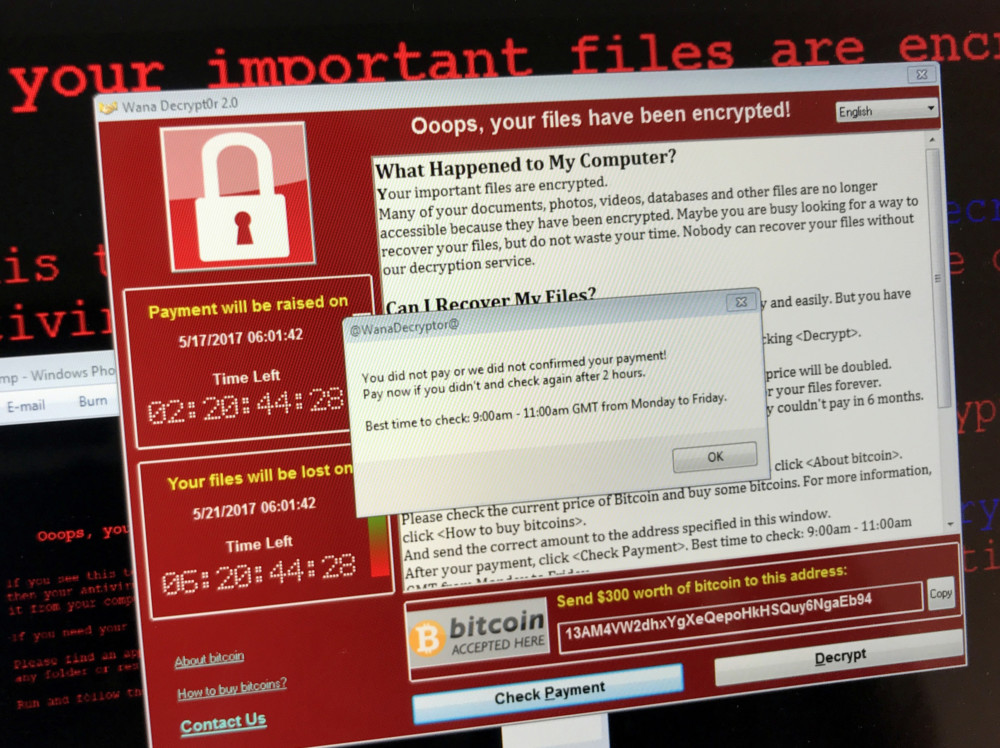

The forum discussing free speech in the social media age, sponsored by the National Constitution Center, comes at a critical point in internet history, when the Russian government used social media to help sway the U.S. presidential election, when Americans are increasingly polarized over politics and are finding havens in digital echo chambers and when misinformation is rampant.

“This is not your fault, but this is your responsibility,” said Larry Kramer, former dean of Stanford Law School, who moderated the forum in a university amphitheater filled with students, professors and members of the public. “Should they do more and can they do better is the question out on the table.”

Facebook founder Mark Zuckerberg already faced some of these questions before Congress last month and acknowledged his company has been slow to address the scope of the problem. Also last month, YouTube headquarters in San Bruno was the scene of a shocking attack when a woman, upset that YouTube was censoring some of her animal cruelty videos and impinging on her free speech rights, wounded three YouTube employees before shooting herself in the company courtyard.

In some ways, these towering issues about the fundamental threat to democracy seemed almost too big for the new Big Three, Facebook, Twitter and YouTube, all private companies with outsize influence over information disseminated to the public.

While there’s nothing new about fake news or the capacity for human beings to lie, said Stanford Law Professor Nathaniel Persily, “it’s the speed of which information travels, it’s unmediated. You don’t have Walter Cronkite. You don’t have referees to monitor the digital debate.”

How these companies curate the information, he said, “becomes critical.”

All three tech executives talked about increasing transparency and authenticity. But all acknowledged that nothing is foolproof and that political speech in particular is the most difficult to regulate, if it should be regulated at all.

“That puts a lot of control in the hands of the companies sitting here in terms of what kind of speech is allowed to have the global reach,” said Juniper Downs, YouTube’s global head of public policy and government relations. “That is a responsibility we take very seriously and something we owe to the public and a civil society.”

It’s not that the companies aren’t trying to solve the problems. They’re all reviewing and updating their standards and community guidelines. Twitter is cracking down on automated accounts, bots and fake followers, but “we have to work a lot harder to make sure our platforms aren’t being manipulated to give false credibility” to the account holders, said Nick Pickles, Twitter’s senior public policy manager.

YouTube in March banned videos made to sell guns or demonstrate how to make them.

Last week, the company announced it is starting to release “transparency reports” to show how YouTube is enforcing its own standards, including how many videos it takes down each month. The company is also labeling some videos to show viewers where they come from, including, for instance, that “RT” is a Russian government-sponsored TV network.

Facebook is making information available on its platform to researchers to help understand the effect of Facebook usage on elections. Still, Facebook’s Schrage urged caution.

“There is no agreement whatsoever on the prevalence of false news and fake propaganda on our platform,” he said. “We have no real understanding of what the scope of misinformation is.”

He suggested that despite these chaotic times, “I do think we should be pretty modest and circumspect in the approaches we take.”

Social media companies need to find creative ways to improve the spread of information, Schrage said. But it won’t be easy.

“No one company,” he said, “is going to solve this problem.”