By Matt Day

The Seattle Times

WWR Article Summary (tl;dr) LinkedIn has changed its algorithm after a Seattle Times report revealed that searches for at least a dozen of the most common female names in the U.S. included a note asking users if they had meant to look for a predominantly male name.

SEATTLE

LinkedIn has changed the way it generates search results to remove prompts that had asked people who searched for some common female names if they meant to look for similar-sounding male names instead.

The professional social networking site has rolled out a change to its search algorithm designed to recognize when a person searches for another user’s full name and to refrain from prompting them to search for a different one, spokeswoman Suzi Owens said in an email.

The changes follow a Seattle Times report that found that, in searches for at least a dozen of the most common female names in the U.S., LinkedIn’s results included a note asking if users had meant to look for a predominantly male name instead.

A search for “Stephanie Williams” brought up a prompt asking if the searcher meant “Stephen Williams.” The site similarly suggested changing Andrea to Andrew, Danielle to Daniel, and Michaela to Michael, among others.

Searches for the 100 most common male names in the U.S. didn’t bring up prompts to switch the search to more commonly female names.

LinkedIn, which Microsoft is buying for $26.2 billion, had said that the prompts were generated automatically by software designed to predict the words or names users were looking for, based on past searches on the site.

After a change to the software, Owens said, the search algorithms are designed to explicitly recognize people’s names, and refrain from trying to correct them with another name.

LinkedIn’s female-to-male name prompts came as some researchers and software engineers warn that artificial intelligence algorithms, like those that underlie search engines, are susceptible to human biases.

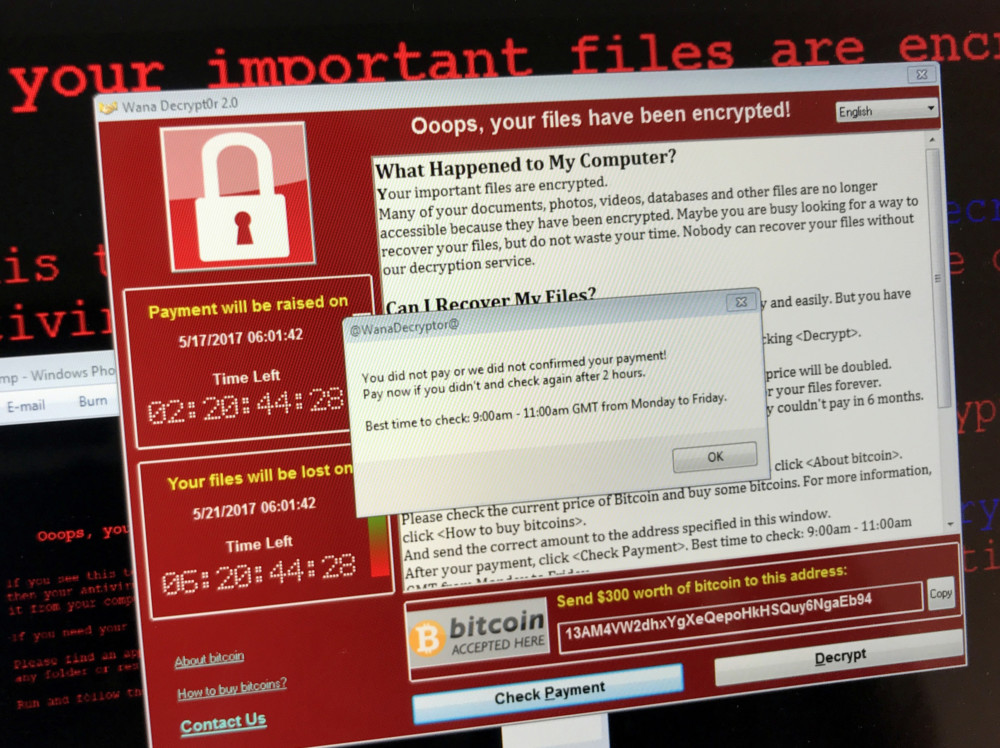

A recent string of notable cases of ostensibly impartial algorithms gone awry, including a Microsoft chatbot that was taken offline after it was provoked into posting racist and sexist slurs, has shone a spotlight on the industry.

Critics say that the homogeneity of developers plugging away on machine intelligence, an overwhelmingly male-dominated group, may be contributing to technology that contains biases.