By Ethan Baron

The Mercury News

WWR Article Summary (tl;dr) As Ethan Baron reports, “Rapid advances in AI have sparked growing concern about the ethics of allowing algorithms to make decisions, the possibility that the technology will replace more jobs than it creates, and the potentially harmful results algorithms can produce when their input includes human bias.”

Artificial intelligence will unleash changes humanity is not prepared for as the technology advances at an unprecedented pace, leading experts told an audience at the official opening Monday of Stanford University’s new AI center.

At a day-long symposium accompanying the center’s launch, speakers from Microsoft co-founder and philanthropist Bill Gates to former Google AI chief Fei-Fei Li and a host of other leaders in the field laid out the promise of AI to transform life for the better or, if appropriate measures are not taken, for the worse.

The Stanford Institute for Human-Centered Artificial Intelligence, a cross-disciplinary research and teaching facility dedicated to the use of AI for global good, needs to educate government along with students, Gates said during his keynote speech.

“These AI technologies are completely done by universities and private companies, with the private companies being somewhat ahead,” Gates told the audience. “Hopefully things like your institute will bring in legislators and executive-branch people, maybe even a few judges, to get up to speed on these things because the pace and the global nature of it and the fact that it’s really outside of government hands does make it particularly challenging.”

Gates said AI can speed up scientific progress. “It’s a chance, whether it’s governance, education, health, to accelerate the advances in all the sciences,” Gates said.

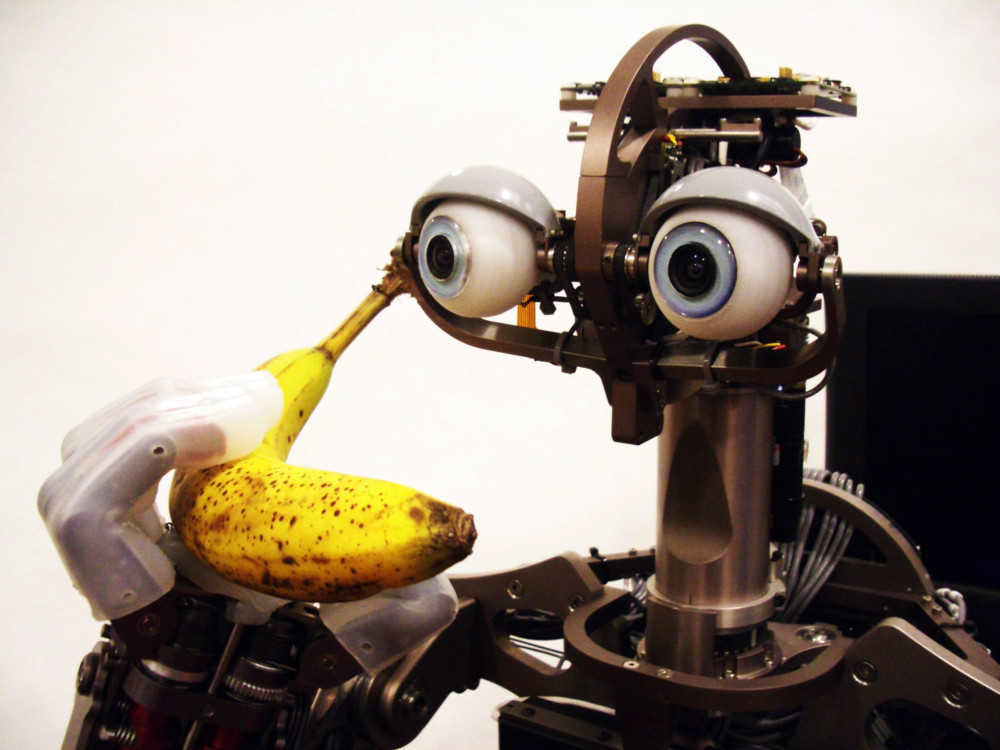

Artificial intelligence is, essentially, algorithm-based software that can “see,” “hear” and “think” in ways that often mimic human processes, but faster and, theoretically, more accurately.

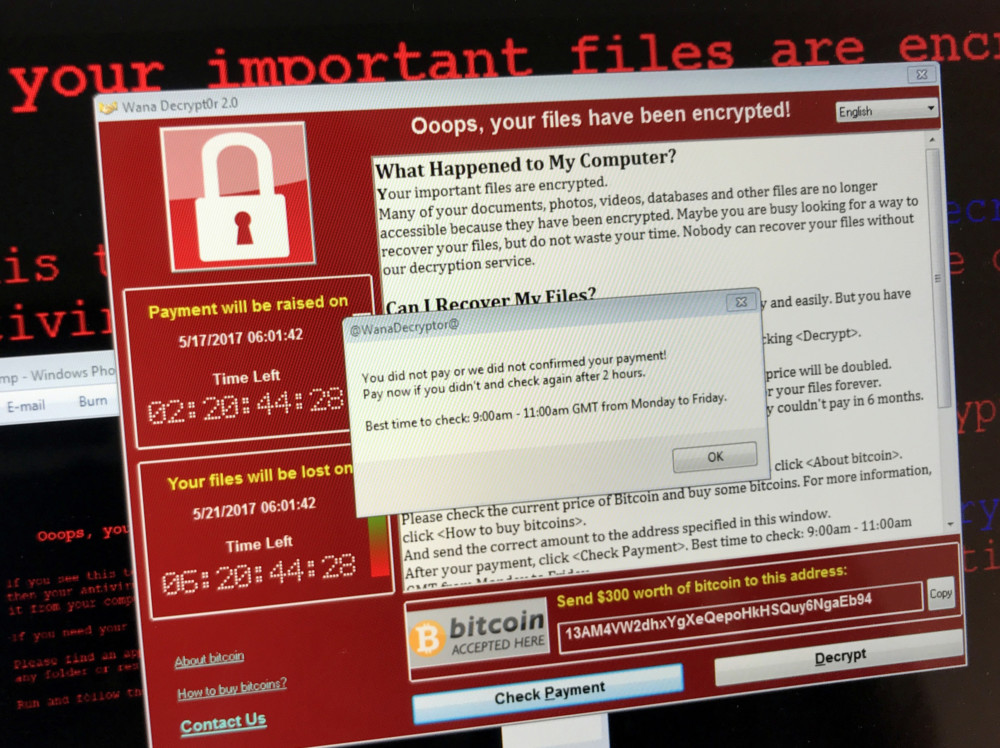

However, rapid advances in AI have sparked growing concern about the ethics of allowing algorithms to make decisions, the possibility that the technology will replace more jobs than it creates, and the potentially harmful results algorithms can produce when their input includes human bias.

“This is a unique time in history, we are part of the first generation to see this technology migrate from the lab to the real world at such a scale and speed,” institute co-director Li told the audience. But, she said, “intelligent machines have the potential to do harm.” Possible pitfalls include job displacement, “algorithmic bias” that results from data infected by human prejudices, and threats to privacy and security.

“This is a technology with the potential to change history for all of us. The question is, ‘Can we have the good without the bad?’” she said.

That question remains to be answered, said Susan Athey, a professor of the economics of technology at the university’s business school. “If we knew all the answers we wouldn’t need to found the institute,” Athey said in an interview. “We’re trying to grapple with big questions that no discipline has monopoly over. What we want to do is make sure we get the greatest minds studying these questions.”

Those minds will come from Stanford schools and departments including computer science, medicine, law, economics, political science, biology, sociology and humanities.

The interdisciplinary structure of the institute will allow researchers, students and instructors to explore the effects of AI on human life and the environment, symposium speakers said. “AI should be inspired by human intelligence, but its development should be guided by its impact,” said university president Marc Tessier-Lavigne.

Because the facility is located at a university, students and faculty can create collaborations that “allow people to learn about AI while actually improving the social good,” Athey said.

Areas ripe for AI-boosted development include medicine, climate science, emergency response, governance and education, speakers said. The technology promises to augment human intelligence, helping doctors diagnose illness or teachers educate children.

Still, AI in many ways falls far short of human intelligence, the symposium heard. While the technology can be applied generally across many fields, its usefulness is, so far, very narrow.

“It does only one thing,” said Jeff Dean, Google’s head of AI. “How do we actually train systems that can do thousands of things, tens of thousands of things? How do we actually build much more general systems?”

Another leader in America’s AI field, MIT professor Erik Brynjolfsson, highlighted the potential prosperity the technology may deliver, if humans can keep up with the pace of change it creates.

“The first-order effect is tremendous growth in the economic pie, better health, ability to solve so many of our societal problems. If we handle this right, the next 10 years, the next 20 years, should be, could be, the best couple of decades that humanity has ever seen,” Brynjolfsson said.

“The fact that there’s no economic law that everyone benefits means that we need to be proactive about thinking about how we make this shared prosperity. The challenge isn’t so much massive job loss, it’s more a matter of poor-quality jobs and uneven distribution.”

Currently, companies are focusing on using AI to perform certain tasks, and work based on such tasks is disappearing, Brynjolfsson said.

“The problem is that human skills, human institutions, business processes change much more slowly than technology does,” he said. “We’re not keeping up. That’s why this human-centered AI initiative is so important. How can we adapt our economics, our laws, our societies? Otherwise, we’re going to be facing more of the unintended consequences.”

Fewer than a dozen companies are large and powerful enough to use AI broadly, and research is concentrated within a handful of countries, said Kate Crawford, co-director of the AI Now Institute at New York University.

“It’s really a small group of people who really have their hands on the levers of power,” Crawford said. “When we start to talk about what that looks like geopolitically, it actually starts to look very concerning.”

Keeping developers of AI accountable for what their technology does is a challenge so far unmet, said Tristan Harris, executive director of the Center for Humane Technology and the former design ethicist at Google. “If Google and Facebook are funding the majority of AI research, then that presents a lot of huge problems,” Harris said. “We need structural accountability.”